Alessandro Rudi’s ERC Starting Grant: re-inventing machine learning!

Changed on 05/07/2021

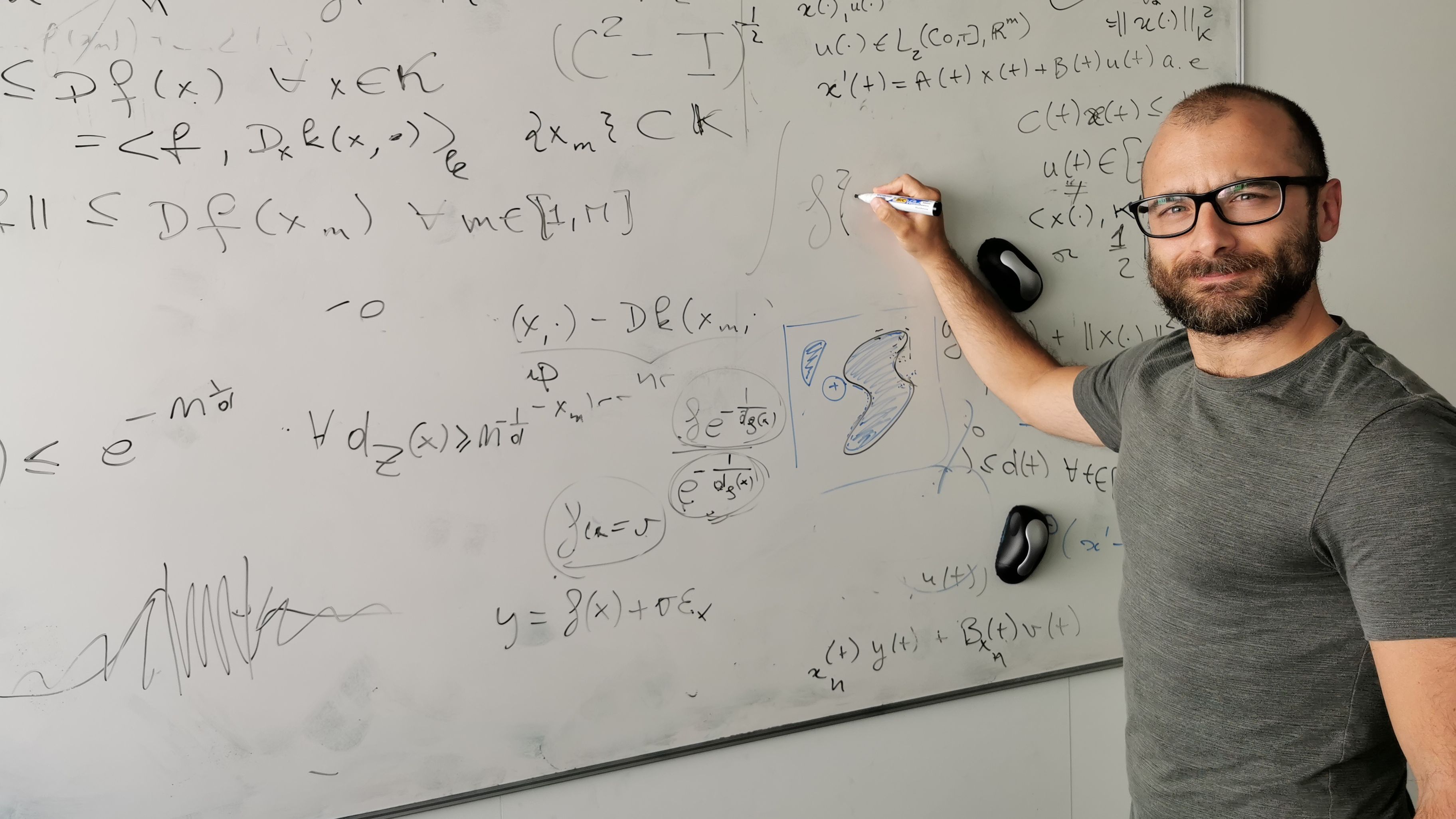

Alessandro Rudi (Inria Paris & École Normale Supérieure) has been awarded a five-year ERC Starting Grant of 1.5 million euros to build a new project team within the Inria Sierra team (Sidebar #1).

His goal? To transform machine learning (ML), making it both more reliable and less demanding in computing time.

ML has become a fundamental tool for analyzing large amounts of data and “predicting” future events from them: using computational tools, learning programs fit a mathematical law to a set of labeled examples (the learning data) and then apply it to new data, giving striking insight into their evolution and/or distribution. As a rule, the more learning data an algorithm is given, the better “prepared” it is to make predictions on new data.

ML famously proved its efficiency by learning to play Go and chess and beating human players. But such resounding victories rely on huge computing resources and long learning times, at a cost of millions of euros per day.

ML algorithms are now crucial building blocks in complex systems for decision making, engineering and science, yet standard ML tools are either very expensive or not entirely trustworthy because of the way the learning step works. Learning data are usually very noisy, and a great deal of them is needed because, statistically, precision grows only like the square root of n, where n is the number of data points: equivalently, the error shrinks like 1/√n, so halving the error requires four times as much data. To overcome computational issues, current methods trade prediction accuracy for computational efficiency, with the result that their predictions usually come with no guarantee of validity.
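A quick simulation illustrates this square-root law. It is a minimal sketch, assuming the simplest possible “learning” task: estimating the mean of noisy Gaussian data from n samples. Quadrupling n should roughly halve the average error. The function name `mean_estimation_error` and all parameter values are hypothetical, chosen only for illustration.

```python
import math
import random
import statistics


def mean_estimation_error(n, trials, rng):
    """Average absolute error of the sample mean of n noisy draws.

    The data are standard Gaussian noise around a true value of 0,
    so the error of the sample mean shrinks like 1/sqrt(n).
    """
    errors = []
    for _ in range(trials):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        errors.append(abs(statistics.fmean(sample)))
    return statistics.fmean(errors)


if __name__ == "__main__":
    rng = random.Random(0)
    for n in (100, 400, 1600):
        err = mean_estimation_error(n, trials=500, rng=rng)
        # err * sqrt(n) stays roughly constant: precision grows like sqrt(n)
        print(f"n={n:5d}  error={err:.4f}  error*sqrt(n)={err * math.sqrt(n):.3f}")
```

In this setup the product error × √n should stay close to √(2/π) ≈ 0.8 for every n, which is exactly the 1/√n behaviour described above.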

Yet the impact of ML has grown over the last decade, and ML algorithms are widely used despite two main shortcomings. First, they lack cost-effectiveness, which directly drives up the economic and energy costs of large-scale ML and puts it barely within reach of universities and research institutes. Second, their predictions lack reliability, which critically affects the safety of the systems in which ML is deployed. ML-based tools have in fact reached a blocking point: the amount of data is growing much faster than computational resources (the post-Moore's-law era*), and the algorithms behave like “black boxes” that give an answer without telling how reliable it is. Moreover, ML is ill-suited to fields where data may be scarce, such as scientific analysis.

To tackle these issues, Alessandro Rudi has been awarded an ERC Starting Grant within the Sierra team, and the young researcher is well prepared for this big challenge: his research at Inria on “efficient and structured large scale machine learning with statistical guarantees” paves the way for a new era in ML. He has already demonstrated his expertise in accurate, cost-effective machine learning algorithms certified by theory, through many peer-reviewed papers in the field's most important journals and international conferences, such as NeurIPS, and through FALKON, a solid Python library for multi-GPU architectures that can process datasets of billions of examples in minutes on standard workstations.

The keystone is to change the very way ML algorithms are built, using fundamental mathematics to provide more efficient and reliable learning models. Mathematics makes it possible to determine how many parameters are really worth keeping, so that models with fewer parameters reach the same accuracy. It then becomes possible to compute precisely the uncertainty of the results the algorithms provide, that is, to obtain predictions with explicit bounds on their uncertainty, guaranteed by theory. These algorithms will also adapt naturally to new architectures and will provably (that is, with a mathematical proof) achieve the desired reliability and accuracy while using the minimum possible computational resources. First results already let them handle 10⁹ (one billion) data points on a mere laptop, a task that takes a thousand computers with standard ML algorithms. Hence the obvious plan for the funding: “hiring people and buy almost no computers!”
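To make the idea of “fewer parameters for the same accuracy” concrete, here is a minimal sketch, assuming a Nyström-type approximation of kernel ridge regression, the kind of approximation the FALKON library is built on. Exact kernel ridge regression keeps one coefficient per training point (n parameters); the Nyström version keeps only m ≪ n coefficients attached to randomly chosen “centers”. This is an illustration, not the method of the project: the function names, the Gaussian kernel and every parameter value are hypothetical, and a plain dense solve stands in for FALKON's iterative solver.

```python
import numpy as np


def gaussian_kernel(a, b, sigma):
    """Gaussian kernel matrix between the rows of a (n x d) and b (m x d)."""
    sq_dists = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq_dists / (2.0 * sigma**2))


def nystrom_krr_fit(x, y, m, lam, sigma, rng):
    """Kernel ridge regression restricted to m randomly chosen centers.

    Minimizes (1/n)||K_nm a - y||^2 + lam * a^T K_mm a, so the fitted
    model has m coefficients instead of one per training point.
    """
    n = x.shape[0]
    centers = x[rng.choice(n, size=m, replace=False)]
    k_nm = gaussian_kernel(x, centers, sigma)        # n x m
    k_mm = gaussian_kernel(centers, centers, sigma)  # m x m
    # Normal equations of the objective above; a tiny jitter keeps
    # the m x m system numerically solvable.
    lhs = k_nm.T @ k_nm + lam * n * k_mm + 1e-8 * np.eye(m)
    alpha = np.linalg.solve(lhs, k_nm.T @ y)
    return centers, alpha


def nystrom_krr_predict(x_new, centers, alpha, sigma):
    """Evaluate the m-parameter model on new points."""
    return gaussian_kernel(x_new, centers, sigma) @ alpha


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, m = 2000, 50  # 2000 training points, only 50 parameters
    x = rng.uniform(-3.0, 3.0, size=(n, 1))
    y = np.sin(x[:, 0]) + 0.1 * rng.standard_normal(n)
    centers, alpha = nystrom_krr_fit(x, y, m=m, lam=1e-4, sigma=1.0, rng=rng)
    pred = nystrom_krr_predict(x, centers, alpha, sigma=1.0)
    print("parameters:", alpha.size)
    print("MSE vs. true function:", np.mean((pred - np.sin(x[:, 0])) ** 2))
```

Here the model stores 50 coefficients where exact kernel ridge regression would store 2000. FALKON additionally replaces the dense solve with a preconditioned conjugate-gradient method, which is what lets it scale to billions of examples.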

Thanks to this ERC Starting Grant, Alessandro Rudi will be able to develop methods and techniques that will help machine learning become a safe, effective and fundamental tool in science and engineering for large-scale data problems. The algorithms developed in the REAL project will be released as open-source libraries for distributed and multi-GPU settings, and their effectiveness will be extensively tested on key benchmarks from computer vision, natural language processing, audio processing and bioinformatics.

*Moore's law (1975) posited that the number of components per integrated circuit, and therefore computing power, would double every two years. Since around 2010, however, semiconductor progress has slowed below the pace predicted by Moore's law.

The ambition of REAL is structured around five main challenges: