ARPANET is now 50 years old

Changed on 23/09/2020

I became a contributor to the ARPANET project when TCP/IP was being defined, with an interest in the problems of synchronisation and flow control in end-to-end protocols.

Starting in 1972, my work was initially focused on the first protocols designed for the ARPANET (the NCP protocol) and for the Cyclades network. I had joined the Cyclades project to create a team in Rennes (Brittany, France), where I could take advantage of a well-organised computer center. I decided on a specific work program after learning that a Simula-67 compiler was available. What about developing an event-based simulator aimed at revealing the causes of those malfunctions exhibited by the early Cyclades protocols – inspired by NCP to some extent?

That turned out to be a good idea. Simula-67 happened to be the first object-oriented language ever created, and programming in Simula-67 was incredibly pleasant and efficient. Simulations were focused on the transport layer (layer 4), while on the ARPANET side, they were studying the problems on layers 3 and 4. (The layering was not yet very clear.) I chose to consider only the end-to-end connections between computers and not the packet switching nodes (ARPANET IMPs or Cigale nodes). This made it possible to look at end-to-end synchronisation problems without being exposed to artificial limitations proper to a specific network layer.

I then got in touch with the ARPANET teams. When Louis Pouzin hired me, he mentioned his intent to have someone from the Cyclades project immersed in one ARPANET site. This was actually an ideal prospect.

Before joining Cyclades, I had spent three years at CERN, working in an international environment and sharing my office with an English colleague. I wanted to face a stiffer challenge, from scientific and societal standpoints. So I toured the ARPANET centers in spring 1973. DARPA (Washington), BBN (Cambridge, MA), Stanford University (Palo Alto, CA), and UCLA (Los Angeles, CA) were on my list. Vint Cerf had just been appointed Professor at Stanford’s Digital Systems Lab. When I met Vint, I knew I had made my choice: top-level scientific discussions and human contact. I had brought along my simulation results. Vint told me they were extremely useful, since NCP was to be replaced by some other protocols (TCP and IP were in the works). So I came to Stanford in June 1973, for a year, to work on synchronisation problems, flow control, error control, and abnormal end-to-end connection failures.

Yes. Shortly after obtaining his PhD at UCLA, Vint started teaching at Stanford and was awarded DARPA funding. He quickly built his team (half a dozen PhD students and one or two engineers in charge of logistics, installation of the IMP, FTP and email software).

Yes, the financing was funnelled through DARPA, coming from the Department of Defense. In the late 1960s, encouraged by the work of Leonard Kleinrock (then at MIT) and the RAND Corporation, DARPA launched the ARPANET project. Later on, when it became obvious that packet-switching was opening up tremendous opportunities, the National Science Foundation and the computing industry joined forces with DARPA. TCP/IP became a commodity technology in 1983, when DARPA decided to put the technology in the public domain.

During the ARPANET years, there was also some funding from DARPA awarded to non-US teams, which had connections to the ARPANET, the Norwegians notably. The British and the French had their own national budgets. Bringing in researchers has always been a DARPA and a US policy. To the best of my knowledge, ARPANET’s developments were not classified. There might have been some confidentiality clauses or patents related to the work conducted by BBN or a few ARPANET teams.

Yes. CERN is a highly international organisation. But it is huge, and I was hired to join a team already structured, and wasn’t really asked to be innovative.

The big difference with Stanford was that over there I could really bring something new. The whole of Vint’s team was constantly brainstorming, investigating fundamental questions regarding time-varying and error-prone inter-process communication. In fact, without knowing it at that time, we were uncovering that terra incognita which went by the name of “distributed computing” years later. To some extent, we were computer scientists learning that our discipline had been so far focused on a special case, centralised systems, and that the time had come for a mental revolution akin to relativistic physics: the hypothesis according to which processes share the same space/time referential should be abandoned. In distributed systems, there is no central locus of control. Some “global state” being necessary for endowing any system with desired properties, specific (distributed) algorithms had to be devised to this end.

That is right. TCP was being designed, and the famous RFCs addressed fundamental questions. The foundations had been laid. A specific example comes to mind. If I remember well, it is Ray Tomlinson who, with the alternating bit protocol, proposed a primitive version of the sliding window scheme on which I was working. The hypothesis that there may be only one packet in transit had been abandoned, and we had to find a scheme whereby multiple packets in transit on a full-duplex connection could be designated unambiguously in the absence of a “natural” time/frame referential and in the presence of failures (packets had to be repeated, which raised the need to know whether any given packet had previously been received or whether it was a new one). To put it simply, network protocols in the ARPANET age were posing problems of cardinality 2 (end-to-end), whereas distributed algorithms are needed for solving problems of any cardinality greater than 2.
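The numbering problem described above can be illustrated with a minimal sketch. This is a textbook-style receiver, not the scheme actually designed for TCP or the one proposed by Tomlinson. The class name `SlidingWindowReceiver`, its fields, and the choice of a sequence-number space of twice the window size are assumptions made for the illustration. The sketch also assumes that packets are never delayed by more than one window.

```python
class SlidingWindowReceiver:
    """Illustrative receiver for a sliding-window scheme (hypothetical names)."""

    def __init__(self, window: int):
        self.window = window
        # Assumption: a number space of 2 * window lets the receiver tell
        # a new packet from a repeated one without any shared clock.
        self.modulus = 2 * window
        self.expected = 0      # lowest sequence number not yet delivered
        self.buffer = {}       # packets received out of order
        self.delivered = []    # payloads handed to the application, in order

    def receive(self, seq: int, payload: str) -> int:
        """Process one packet and return the next expected sequence number."""
        offset = (seq - self.expected) % self.modulus
        if offset < self.window:
            # Inside the window: a new packet (or one already buffered).
            self.buffer.setdefault(seq, payload)
            while self.expected in self.buffer:
                self.delivered.append(self.buffer.pop(self.expected))
                self.expected = (self.expected + 1) % self.modulus
        # Outside the window: a repeat of a delivered packet, so it is
        # ignored, but the acknowledgement is still returned.
        return self.expected


receiver = SlidingWindowReceiver(window=2)
receiver.receive(0, "a")   # new packet, delivered
receiver.receive(0, "a")   # repeated packet, recognised and ignored
receiver.receive(2, "c")   # out of order, buffered
receiver.receive(1, "b")   # fills the gap, "b" then "c" are delivered
print(receiver.delivered)
```

With `window=1` the number space shrinks to a single alternating bit, which corresponds to the case of only one packet in transit.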

As far as I was concerned, besides my work on TCP, I had total freedom. For example, while in Vint’s team, I became interested in the work conducted at nearby Xerox PARC, which led to Ethernet. Also, I was reading papers on the ring developed at Irvine University (CA). Both topics had a definite influence on my work related to real-time local area networks. PhD students were offered a choice, among the themes selected by Vint. Presentations were organised on a weekly basis. Everyone picked a theme according to his desire, in connection to open problems or published papers. We were looking for solutions, and there was some kind of scientific competitive spirit in the air, as well as the conviction that we could expose any “crazy ideas” and get immediate feedback. This kind of “thinking” was specific to the birth of Silicon Valley.

The sharing of speculative inroads is specific to research. In the USA, it was easier than elsewhere to engage massive research efforts that would attract attention, bringing in the best researchers. Awareness of emerging topics was not widespread in France at that time. When I returned to France in 1974, I started teaching a course on computer networks at the University of Rennes. In 1977, one of the professors at that university had still not understood much of it. His vision was that “issues of bits per second, message loss rates, and reliable message deliveries are not related to computing, but to physics.” Clearly, there was a huge cultural gap between French and US universities at that time.

I was honest. I had promised Louis Pouzin I would come back to the Cyclades team, even if the temptation was great indeed to stay in the USA. We were offered golden job opportunities in those early days of emerging Silicon Valley.

Four years after I left Stanford, I published a journal paper based on my work, first in France and then at Stanford. I was not very interested in publishing on past work, and I was deeply invested in my research on distributed algorithms.

In the USA, I saw and learned how to conduct disruptive research: don’t follow the crowd, think by yourself, and when you are confident enough in your findings, take your responsibilities and publish/propagate, even if you break away from dominant or trendy topics.

Yes. Unfortunately I was unable to attend the celebration that took place on this occasion in July 2005.

Indeed. What had I brought into my nets? Layered architectures, the importance of properly separating physics (transmissions, links, computers) and virtual/logical structures (connections, processes, system models). In 1977, I published a pioneering paper on distributed computing (a distributed algorithm for fault-tolerant mutual exclusion, based on the concept of a virtual ring). I brought back information on how routing would be done in the future Internet. And knowledge relative to what would become an area of very high visibility: local area networks.

Europeans who immersed themselves in ARPANET teams? I do not remember, but there were significant contributions by Europeans. The idea of layered protocols was adopted by everyone and led to the OSI project at the International Organization for Standardization, in which the foreign community was mobilised. I had a lot of discussions with Hubert Zimmermann on the layered model, but I quickly gave up because I was more interested in my more theoretical research program. One of the members of Cyclades, Jean Le Bihan, proposed in 1978 that I should join the Sirius project he was conducting on distributed databases, which I did, for I could then work on distributed algorithmic issues such as concurrency control and the serializability theory.

As far as OSI was concerned, the problem was closed for me. And I have a personal bias: I hate standards committee meetings. I think they take too long to achieve convergence and, very often, good ideas come from the outside. So many would-be standards have been discarded only a few years after they became official! John McQuillan said something I liked very much in the 1980s: “Standards are great, there are so many you can choose from!” Standards rest on human-centric agreements, which explains why some standards are flawed, notably those resting on “solutions” that violate impossibility results. Current standards for inter-vehicular cooperative schemes based on wireless radio communications aimed at autonomous/automated vehicles are an example – terrestrial and aerial autonomic vehicular networks are my current area of research.

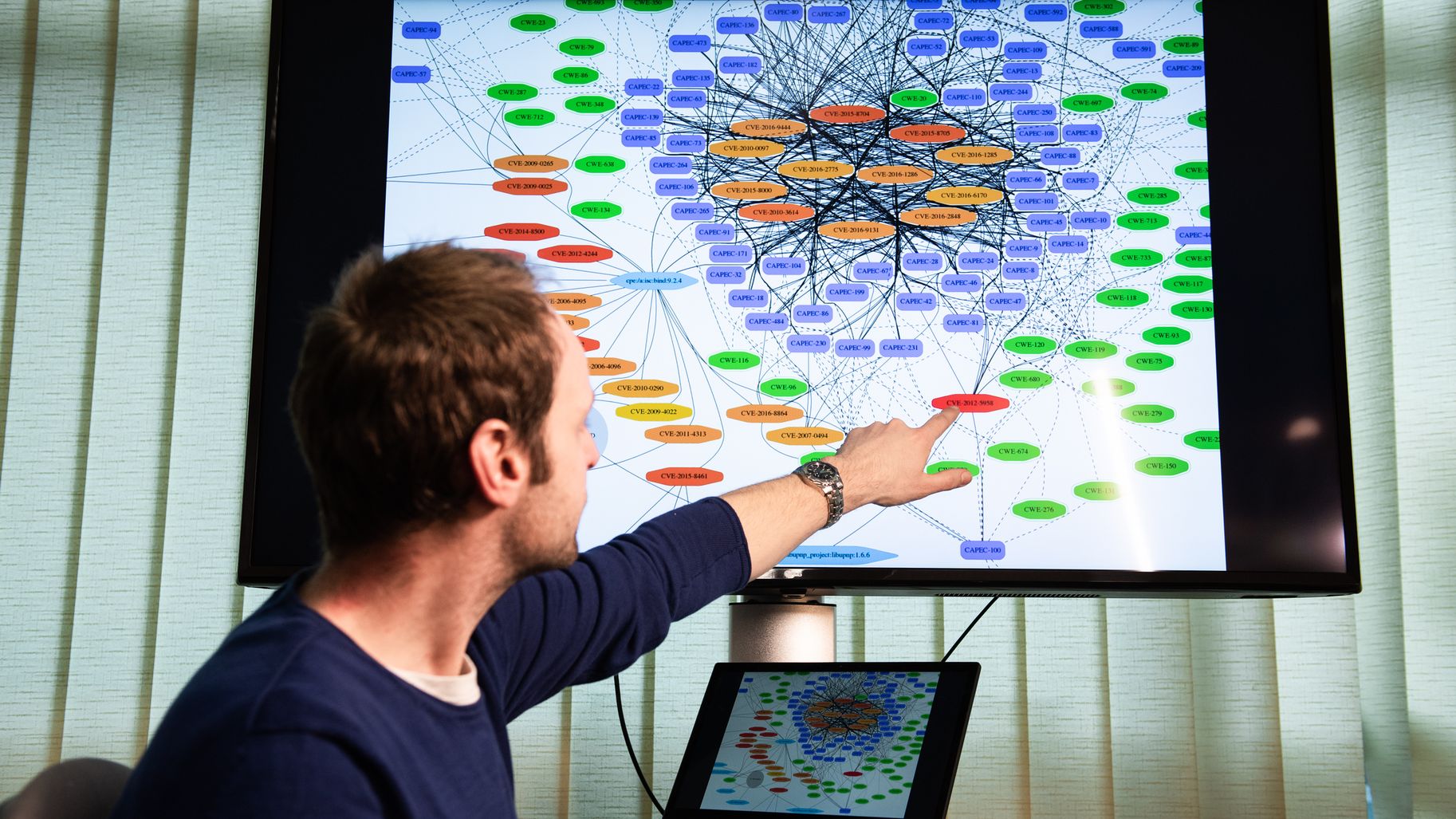

Cyberattacks, privacy threats and illegitimate cyber-espionage were not foreseen at that time. We had the firm conviction that we were developing a tool that would serve humanity, and no one really thought it could be misused. There are no encryption algorithms, no pseudonym schemes in the ARPANET protocols’ “DNA.” These issues have gained higher visibility since the advent of mobile wireless networking. We know that we are vulnerable in cyberspace via our smartphones. Matters are much more worrying with upcoming “connected” automated vehicles. With currently adopted solutions, these vehicles are equivalent to smartphones-on-wheels, making us vulnerable in the physical space: smartphones-on-wheels may kill.

Gérard Le Lann: Today, everything runs on TCP/IP. The Internet would not have existed without ARPANET (and external contributions, notably from France and the UK), and the Internet is not dead, contrary to recurrent dogmatic “predictions”! More than anything else, packet-switching has been THE crucial revolutionary technology, experimented first with ARPANET. Without packet-switching, none of those cyber-services we rely on daily nowadays, none of those technologies that have emerged recently (e.g. IoT) would have come into existence.

Yes indeed, Bob Metcalfe had outlined the idea of a future Enernet, an open network based on energy packet-switching, where energy packets could be produced, disseminated, traded, and consumed anywhere, anytime. The future will tell whether something close to Enernet can be deployed. We have many challenges to think about. In the list of new ground-breaking technologies, one finds mobile optical communications: passive optics and active optics (visible light communications). They can serve to solve fundamental problems left open with radio technologies. Just one quick example: naming in spontaneous open mobile networks, where no advance knowledge of neighbours, membership, geolocations or velocities is available. Optical communications also have a decisive advantage over radio communications: they can be privacy-preserving by design. As regards personal data protection, with the GDPR, the Europeans are ahead this time, I believe.

Gérard Le Lann graduated in Applied Mathematics from the University of Toulouse and as an engineer from ENSEEIHT (Toulouse). He then obtained a PhD in mathematics, specialising in information technology, from the University of Rennes. He began his career at CERN in 1969.

In 1972 he joined Iria (now Inria) to participate in the Cyclades pilot project led by Louis Pouzin, whose goal was to create a computer network capable of competing with the ARPANET network being established at that time in the United States.

At the University of Rennes, he created the first team to work on computer networks. At Stanford University, following his work on simulating network protocols, he worked with Vinton Cerf on designing the TCP/IP protocols that have since been used for data transfer over the Internet.

On his return to Iria, he published a seminal paper on fault-tolerant distributed algorithms in 1977. He was appointed Director of Research in 1978. During the 1980s, he published significant research findings on distributed databases and real-time systems, which, in addition to scientific partnerships, led to numerous collaborations with industry and national and supranational bodies (most notably the French Government Defence Agency and the European Space Agency). Evidence of this includes the use of the “deterministic Ethernet” patent in both the civil and defence fields, and the three-year provision, free of charge, of computers to Rocquencourt by Digital Equipment Corporation (DEC), a first in Europe for DEC. In addition to real-time distributed algorithms, his work then focused on evidence-based systems engineering and critical systems. An Emeritus Research Director since 2008, he is currently working on automated and interconnected vehicular networks, for which he has proposed cyberphysical solutions ensuring both safety (almost no accidents) and cybersecurity (no cyber-espionage and immunity to cyberattacks).

In 2012, he received the Willis Lamb Award from the French Academy of Sciences.