Interview with Lauren Thevin: using virtual and augmented reality to help people with visual impairment

Changed on 09/04/2025

I did a degree in Applied Mathematics and Social and Human Sciences, and then went to study at the School of Cognitive Engineering (ENSC) in Bordeaux. I then did my thesis in Computer Science at the University of Grenoble, on computer-supported human-human collaboration applied to emergency response management. The aim was to train people using "disaster" scenarios, such as flooding, and to analyse how they behaved in relation to the predefined procedures. For example, that involved checking whether people evacuated the right areas and, at the same time, assessing whether the emergency response plan itself was appropriate to the situation, that is, whether it planned evacuation for the right areas. I then spent six months working on UX (user experience) design at an automotive company.

I'm a postdoctoral researcher, working on augmented reality and virtual reality so that they can be used by visually impaired people.

The idea is to design tools that make use of senses other than sight, such as hearing and touch, and then see how these methods might be used in the ‘real world’.

At the moment, I'm working on a mixed reality simulator to teach young people at the CSES Alfred Payrelongue special education centre for the visually impaired how to cross the road. To begin with, they use virtual reality to learn how to analyse a junction in a safe environment, with no cars, before going on to apply what they have learned in a real-world situation.

My presentation, "Invisible interfaces, the future of interfaces?", is about non-visible interfaces (meaning "transparent" interfaces, and other types of interfaces that are not, strictly speaking, graphical interfaces), in other words, all forms of interaction that do not rely on a computer screen.

Invisible interfaces raise two questions:

If we fail to address all these alternatives to sight, we will effectively be excluding the 10% of the population who are visually impaired…

Lauren Thevin will give her presentation on Friday, 22 June, at 2 pm in the Grande Hall at La Villette.

I'll be talking about the issue of equal access to technology. Today, we have many assistive technologies that make inaccessible content accessible thanks to dedicated tools: content that was not initially appropriate becomes "compatible" with an impairment, for example by providing audio-tactile feedback as an alternative to visual media. Inclusive technologies go a step further and make content accessible both for people who have an impairment and for people who do not. That opens up the possibility of using a technology collaboratively, but it does not guarantee it.

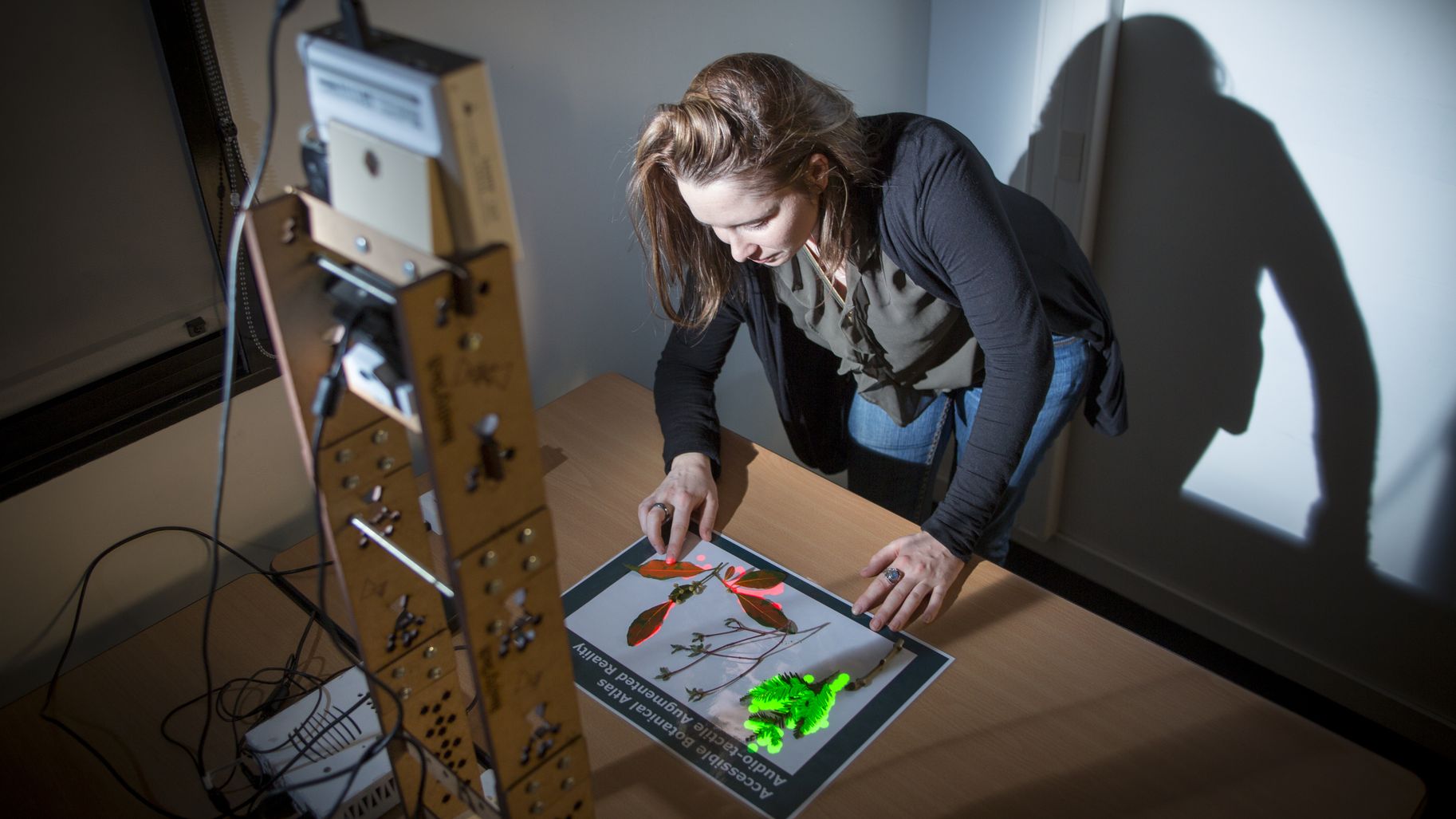

Audio-tactile augmented reality makes it possible for visually impaired people to use digital media.

I'll be showing an accessible interactive map designed, developed and tested in partnership with the Regional Institute for the Deaf and Blind (IRSA) in Bordeaux, to help visually impaired people explore and learn geographical maps. The first version uses augmented reality to make a tactile map interactive through touch, and uses speech synthesis to describe features on the map, albeit within certain limitations. For example, a visually impaired person finds it hard to know which parts of the map they have already explored. We therefore provide a speech synthesis function that tells the user whether or not they have explored all parts of the map and, if they have not, in which direction (up/down, left/right) to move to find the closest unexplored element. This function has since been improved so that teachers can tell the computer system which feature the student should explore next. This project is the first step toward developing an accessible and inclusive collaborative application with personalised information feedback for all users, without any interference.
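To make the exploration guidance described above more concrete, here is a minimal sketch in Python. It is not the project's actual code: the `MapElement` class, the function names, the coordinate convention (x to the right, y upwards) and the wording of the messages are all assumptions made for illustration. It simply picks the closest unexplored element, or a feature chosen by the teacher, and turns its position relative to the finger into an up/down, left/right hint that a speech synthesiser could read aloud.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Optional


@dataclass
class MapElement:
    """Hypothetical map feature; all fields are illustrative assumptions."""
    name: str
    x: float  # horizontal position, increasing to the right
    y: float  # vertical position, increasing upwards
    explored: bool = False


def direction_hint(finger_x: float, finger_y: float, target: MapElement) -> str:
    """Describe where the target lies relative to the finger."""
    dx = target.x - finger_x
    dy = target.y - finger_y
    parts = []
    if dy > 0:
        parts.append("up")
    elif dy < 0:
        parts.append("down")
    if dx > 0:
        parts.append("right")
    elif dx < 0:
        parts.append("left")
    return " and ".join(parts)  # empty string when the finger is on the target


def guidance_message(
    finger_x: float,
    finger_y: float,
    elements: List[MapElement],
    teacher_target: Optional[MapElement] = None,
) -> str:
    """Build the sentence that would be passed to speech synthesis."""
    if teacher_target is not None:
        # The teacher has chosen which feature should be explored next.
        target = teacher_target
    else:
        remaining = [e for e in elements if not e.explored]
        if not remaining:
            return "You have explored all parts of the map."
        # Otherwise guide the user to the closest unexplored element.
        target = min(
            remaining,
            key=lambda e: hypot(e.x - finger_x, e.y - finger_y),
        )
    hint = direction_hint(finger_x, finger_y, target)
    if not hint:
        return f"You are on {target.name}."
    return f"Move {hint} to find {target.name}."
```

For example, with the finger at (2, 1), an unexplored river at (2, 5) and an already explored station at (8, 1), `guidance_message` directs the user up towards the river. Passing the station as `teacher_target` overrides that choice and directs the user to the right instead.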