Exploring the dark side of the universe

Date: updated on 10/05/2023

Many of us are fascinated by the dark side of the moon. But the universe also has a dark side, which many scientists are seeking to uncover. This is the aim of an ambitious, multidisciplinary international research programme that brings together expertise in computer science, mathematics and cosmology. Since 2015 the project has been coordinated by Bruno Lévy, Inria director of research, Roya Mohayaee, CNRS research fellow at the Paris Astrophysics Institute (Sorbonne University - CNRS), and Sebastian von Hausegger, a researcher at the University of Oxford. They were recently joined by two US experts in astronomy and physics, Ravi Sheth and Farnik Nikakhtar. Sheth is a professor at the University of Pennsylvania, while Nikakhtar is a postdoctoral researcher at Yale University.

“Mathematics is the language we all share”, explains Bruno Lévy. “It is a powerful language for describing what goes on in the universe, including the principles of physics discovered through cosmology” (cosmology being the branch of physics that studies the universe and its history). The computer science research, for its part, aims to invent new algorithms, that is, methods that allow computers to efficiently calculate the equations and formulas of physics. “That’s the magic wand that breathes life into mathematical formulas, making it possible to simulate the laws of physics digitally”, explains the director of research.

The project’s first major breakthrough came in the summer of 2022. It was reported in an article in the journal Physical Review Letters before being featured in the prestigious magazine Physics.

This innovation, which has been compared to a time machine, is centred on a mathematical method that uses optimal transport theory to reconstruct the past trajectories of stars and galaxies from a 3D map of the cosmos. “We wanted to see what the universe looked like billions of years ago, and we managed the first steps, using simplified examples and a limited set of data”, explains Bruno Lévy.
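To give a feel for the idea, optimal transport can be phrased as a matching problem: assuming matter started out spread almost uniformly on a grid, find the assignment of each observed galaxy to a starting grid point that minimises the total squared displacement. The sketch below solves a toy version by brute force over every possible matching, assuming equal masses for all points. It only illustrates the quantity being minimised and is not the team's own algorithm, which is far more scalable; the function names and data are hypothetical.

```python
from itertools import permutations

# A point in 3D space (hypothetical units).
Point = tuple[float, float, float]


def squared_distance(a: Point, b: Point) -> float:
    """Squared Euclidean distance: the quadratic transport cost."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def reconstruct_by_brute_force(
    final_positions: list[Point], initial_grid: list[Point]
) -> tuple[tuple[int, ...], float]:
    """Hypothetical helper: match galaxy i to grid point sigma[i] so that the
    total squared displacement is minimal. Tries all n! matchings, so it is
    only usable for a handful of points; equal masses are assumed."""
    n = len(final_positions)
    if n != len(initial_grid):
        raise ValueError("expected as many grid points as galaxies")
    best_sigma: tuple[int, ...] = ()
    best_cost = float("inf")
    for sigma in permutations(range(n)):
        cost = sum(
            squared_distance(final_positions[i], initial_grid[sigma[i]])
            for i in range(n)
        )
        if cost < best_cost:
            best_sigma, best_cost = sigma, cost
    return best_sigma, best_cost


# Toy data: four grid points in a plane and four "galaxies" that drifted slightly.
initial_grid = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
final_positions = [(1.1, 0.9, 0.2), (0.1, -0.1, 0.1), (-0.2, 1.1, 0.0), (0.8, 0.1, -0.1)]

sigma, cost = reconstruct_by_brute_force(final_positions, initial_grid)

# Displacement of galaxy i: from its reconstructed starting point to where it is now.
displacements = [
    tuple(x - q for x, q in zip(final_positions[i], initial_grid[sigma[i]]))
    for i in range(len(final_positions))
]
```

Here each galaxy is traced back to the nearest free grid point, `sigma == (3, 0, 2, 1)`, and the displacements play the role of the reconstructed trajectories.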

The team then set about improving their method, making the calculations more powerful and testing its robustness to incomplete data and to parameters that cannot be measured. “You have to remember that a certain proportion of galaxies have not been observed”, explains Bruno Lévy.

The question of dark matter becomes even more complicated.

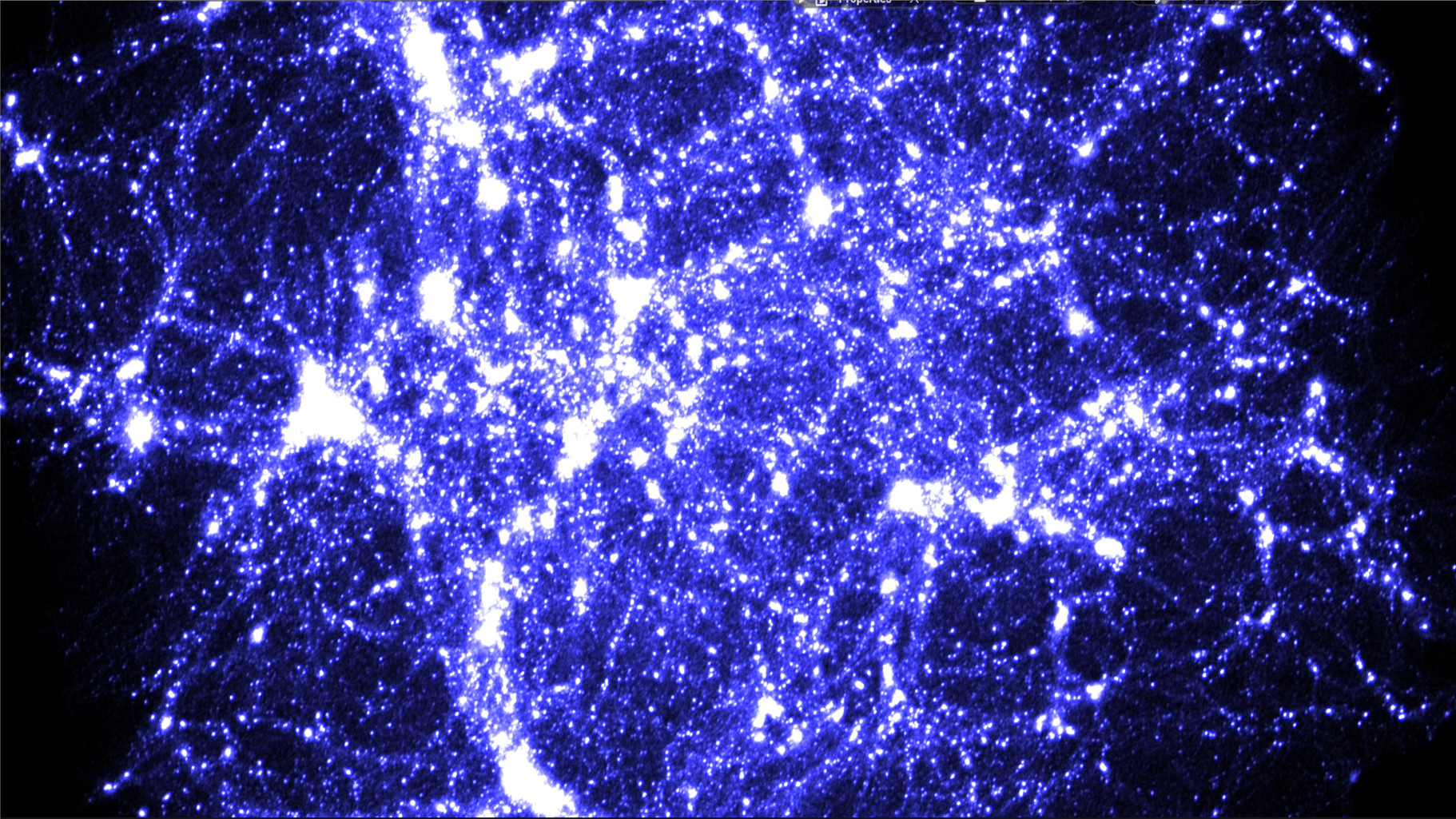

The term “dark matter” refers to a set of effects observed during the 20th century, notably by Fritz Zwicky in the 1930s and Vera Rubin in the 1970s. But no one has yet managed to observe dark matter directly, to identify what it is made of, or to show that these effects instead reflect a modification of the laws of gravity. The same goes for dark energy, whose source and nature remain unknown but whose effects were revealed in the late 1990s by the teams of Saul Perlmutter, Brian Schmidt and Adam Riess: it is responsible for the accelerating expansion of the universe over the last few billion years.

In a new paper published in late 2022 in Physical Review Letters, researchers from the international team showed that the mathematical tool they designed can be applied to real data, which are uncertain and incomplete. They demonstrated that their method can recover useful information even when part of the data is missing. In the long term, their tool will make it possible to test different theories of dark matter and dark energy, expressed as mathematical equations. The aim will be to confront these equations with reality by assessing how consistent they are with observational data.

The challenge now will be to adapt this mathematical method to the ever-growing volume of observational data coming from powerful telescopes. “We have been able to make calculations on 300 million points and hope soon to go beyond one billion points”, says Bruno Lévy. “The long-term aim is to apply our algorithm to actual observational data, including data from the DESI project” (Dark Energy Spectroscopic Instrument), adds Farnik Nikakhtar, postdoctoral researcher at Yale University. As this specialist explains, with more than a hint of excitement, the project is expected to observe “40 million galaxies and quasars” by the end of the decade.
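Some back-of-the-envelope arithmetic shows why these point counts are such a challenge. Assuming, purely for illustration, a naive solver that stores one double-precision cost for every pair of galaxy and grid point, memory alone grows with the square of the number of points. This is not how the team's algorithm works; the constant and function name below are hypothetical.

```python
# Assumption: each pairwise cost is stored as one 8-byte float64.
BYTES_PER_FLOAT64 = 8


def dense_cost_matrix_bytes(n_points: int) -> int:
    """Memory needed by a hypothetical naive solver that keeps an
    n x n matrix of costs between every galaxy and every grid point."""
    return n_points * n_points * BYTES_PER_FLOAT64


for n in (300_000_000, 1_000_000_000):
    exabytes = dense_cost_matrix_bytes(n) / 10**18
    print(f"{n:,} points -> {exabytes:.2f} exabytes")
```

This gives about 0.72 exabytes for 300 million points and 8 exabytes for one billion, far beyond any single machine, which is why practical methods must avoid storing all pairwise costs.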

In the future, processing such volumes of data will require either a bigger computer or a network of many computers. “For these enormous simulations, we can either use brute force and run the algorithm on a supercomputer (such as Jean Zay), or we can take a smarter approach and replace the supercomputer with a ‘super-algorithm’”, explains Bruno Lévy. This will involve approaching the method from a new angle in order to find a “mathematical shortcut” that produces results more quickly. In the long term, this should make it much easier for cosmology researchers to run calculations on their own computers, without the need for rare and expensive supercomputers.
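The notion of a “mathematical shortcut” can be illustrated with a deliberately simple analogy, which is not the team's actual method: in one dimension, the matching that minimises total squared displacement is obtained by sorting both sets of points and pairing them in order. A brute-force search over all n! matchings and an O(n log n) sort therefore reach the same optimum. The function names and toy data are hypothetical.

```python
from itertools import permutations


def brute_force_cost(sources: list[float], targets: list[float]) -> float:
    """Minimal total squared displacement, found by trying all n! matchings.
    Assumes len(sources) == len(targets)."""
    return min(
        sum((s - targets[j]) ** 2 for s, j in zip(sources, sigma))
        for sigma in permutations(range(len(targets)))
    )


def sorted_cost(sources: list[float], targets: list[float]) -> float:
    """Same optimum in 1D: pair the k-th smallest source with the
    k-th smallest target. Assumes len(sources) == len(targets)."""
    return sum((s - t) ** 2 for s, t in zip(sorted(sources), sorted(targets)))


galaxies = [2.7, 0.4, 5.1, 3.3, 1.9]  # hypothetical observed 1D positions
grid = [0.0, 1.0, 2.0, 3.0, 4.0]      # hypothetical uniform starting grid

print(brute_force_cost(galaxies, grid))
print(sorted_cost(galaxies, grid))
```

Both calls give approximately 2.76. The brute-force version becomes hopeless beyond about a dozen points, whereas sorting handles millions: exploiting structure in the problem instead of adding hardware is the kind of gain a “super-algorithm” aims for.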

Verbatim

For me, the most exciting part of our results is that we can now accurately determine the shape of the initial patches of space from which dark matter halos and galaxy clusters formed. This ability is particularly thrilling because it has the potential to enhance our understanding of the connection between dark matter and visible matter, which has been a longstanding enigma in astrophysics.

Author: Farnik Nikakhtar

Position: Postdoctoral researcher at Yale University, having completed a PhD in physics and astronomy at the University of Pennsylvania in 2022
