Learning algorithms: multi-armed bandits at the heart of a Franco-Japanese associate team

Updated on 05/02/2025

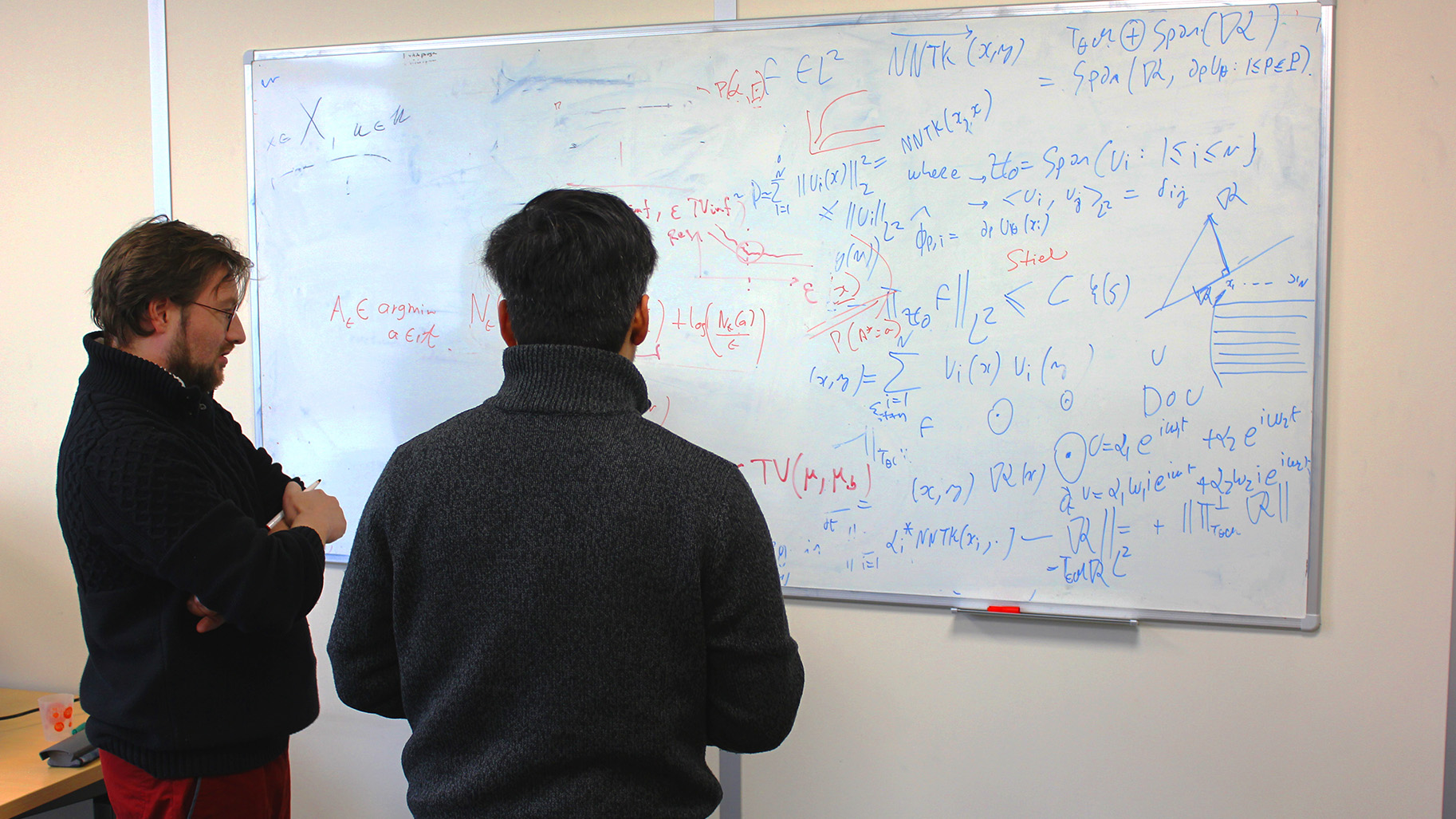

“As academic researchers, we are fortunate to have an opportunity to lay theoretical foundations that will enable us to address the key societal issues central to tomorrow's industries,” enthuses Odalric-Ambrym Maillard, Inria researcher and coordinator of the Reliant associate team in France. Reinforcement learning is one of these key topics and lies at the heart of Reliant's activities. For three years, scientists from the Scool project team (shared between the Inria Centre at the University of Lille and the CRIStAL laboratory) and from Kyoto University, Japan, have been working on this topic, and more specifically on “multi-armed bandits”.

This theory, which underpins predictive algorithms, faces a major challenge: the gap between its theoretical results and the results obtained when it is applied to real cases. “Our aim was therefore to test our algorithms in the real world, in order to overcome the obstacles to their application,” summarises the researcher.

The team led by Junya Honda, Associate Professor at Kyoto University, is also at the forefront of fundamental research on reinforcement learning. “We shared a desire to apply our theoretical work to the real world rather than rely on simulators,” he explains. “So in 2019, I invited Odalric-Ambrym Maillard to give a talk on the latest developments concerning multi-armed bandits.” On that occasion, the two researchers struck up a connection with a view to setting up a collaborative project. This is how the idea of the Reliant associate team came about.

The term “multi-armed bandit” comes from slot machines, nicknamed “one-armed bandits”. In probability theory, it describes an optimisation problem: faced with several machines, we want to determine which one is the most profitable. Solving it means striking a balance between exploration and exploitation. In the simplest scheme, several machines are first tested to find out which ones pay best, and the winnings are then maximised on the basis of the knowledge acquired; more refined strategies interleave the two, continuing to explore a little while mostly exploiting.
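To make the exploration/exploitation trade-off concrete, here is a minimal Python sketch of UCB1, a classic bandit strategy (Auer, Cesa-Bianchi and Fischer, 2002) chosen purely for illustration; it is not presented as one of the Reliant team's algorithms. It assumes each hypothetical “machine” pays 1 with a fixed, hidden probability and 0 otherwise; the function name `ucb1` and its parameters are our own. After trying every machine once, UCB1 adds an optimism bonus to each machine's average payout, so rarely tried machines keep being explored until the evidence rules them out.

```python
import math
import random


def ucb1(means, horizon, seed=0):
    """Illustrative sketch: UCB1 on hypothetical Bernoulli 'slot machines'.

    means[i] is the (hidden) payout probability of machine i.
    Returns (pulls per machine, total reward, pseudo-regret of this run).
    """
    rng = random.Random(seed)
    k = len(means)
    counts = [0] * k   # number of times each machine was played
    sums = [0.0] * k   # total reward collected on each machine
    total_reward = 0.0

    for t in range(horizon):
        if t < k:
            # Initial exploration: play every machine once.
            arm = t
        else:
            # t plays have been made so far. Score = empirical mean + bonus;
            # the bonus shrinks as a machine is played more often.
            log_t = math.log(t)
            scores = [sums[a] / counts[a] + math.sqrt(2 * log_t / counts[a])
                      for a in range(k)]
            arm = scores.index(max(scores))
        reward = 1.0 if rng.random() < means[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward
        total_reward += reward

    # Pseudo-regret: sum over machines of (gap to the best mean) x (plays).
    best = max(means)
    regret = sum(c * (best - m) for c, m in zip(counts, means))
    return counts, total_reward, regret


if __name__ == "__main__":
    counts, reward, regret = ucb1([0.2, 0.5, 0.8], horizon=2000, seed=1)
    print("plays per machine:", counts)
    print("total reward:", reward, "pseudo-regret:", round(regret, 1))
```

With three machines paying out 20%, 50% and 80% of the time, the plays should concentrate heavily on the third machine, and the pseudo-regret should stay well below the roughly 600 expected from choosing machines uniformly at random over 2,000 plays.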

This is a simplified case of reinforcement learning, in which an “agent” learns to make decisions by trying out different actions and observing what happens. Each action yields a reward indicating how well the agent has acted. The agent gradually adjusts its strategy to choose the actions that bring it the greatest returns over the long term. The aim is to minimise “regret”, i.e. the cumulative difference between the rewards actually obtained and those that would have been obtained by choosing the best option at every step.
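One standard way to write this down is the textbook pseudo-regret; the article does not say which regret variant the Reliant team uses, so treat this as an illustrative assumption. With $K$ options of mean reward $\mu_1, \dots, \mu_K$, best mean $\mu^\star$, $A_t$ the option chosen at step $t$ and $N_a(T)$ the number of times option $a$ was chosen in the first $T$ steps:

```latex
R_T \;=\; T\,\mu^\star \;-\; \mathbb{E}\Big[\sum_{t=1}^{T} \mu_{A_t}\Big]
    \;=\; \sum_{a=1}^{K} \Delta_a\, \mathbb{E}\big[N_a(T)\big],
\qquad \Delta_a = \mu^\star - \mu_a .
```

The second form shows that regret only accumulates on inferior options, in proportion to how much worse they are ($\Delta_a$) and how often they are chosen; a good algorithm keeps $R_T$ growing only logarithmically in $T$.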

Algorithms designed in this way can support decisions in a wide range of situations. “This method is particularly well suited to medicine,” says Odalric-Ambrym Maillard. “But in this context, there is no simulator of human patients on which to train the algorithms. We therefore need to confront theory with medical reality.” Take the example of a project carried out with the hospital in Lille, where post-operative follow-up requires decisions to be made for each patient: when should they be asked to return for a consultation? Which specialist should they see?

Framed as reinforcement learning problems, these questions run into several difficulties: very small samples (here, the number of patients), “risk aversion” (the need to avoid harmful outcomes), “non-parametric” settings, privacy protection and data corruption. The Reliant associate team has shown how algorithms can be optimised despite these constraints, something that had never been achieved before.

“One of the major difficulties concerns the gap between the theory and the actual behaviour of algorithms when very little data is available, as is the case in medicine. Our team therefore set out to identify the solution with the best accuracy guarantee for a limited number of trials.” (Junya Honda, Associate Professor at Kyoto University)

At Inria, the scientists have examined non-parametric cases, i.e. situations where the data cannot be described by a model with a fixed, small set of parameters. “Our case study concerned agro-ecology,” explains Odalric-Ambrym Maillard. “The aim was to determine the best agricultural practices out of some ten strategies.”

“We evaluated our algorithms using simulators that predict quantities such as yield, soil fertility and harvest quality. Our methods based on multi-armed bandits produced better results than the usual experiments carried out by agronomists.” (Odalric-Ambrym Maillard, Inria researcher)

This work has led to the development of optimal multi-armed bandit algorithms in a non-parametric framework. A first!
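Picking the best of around ten strategies with a fixed number of trials is what the bandit literature calls fixed-budget best-arm identification. The Python sketch below uses the classic Successive Rejects strategy of Audibert, Bubeck and Munos (2010) as a stand-in; it is not the non-parametric algorithm developed by the team, and the Bernoulli “success” model for each strategy, the function name `successive_rejects` and its parameters are hypothetical. The budget is split into K − 1 phases, and after each phase the surviving strategy with the lowest empirical mean is discarded.

```python
import math
import random


def successive_rejects(means, budget, seed=0):
    """Illustrative sketch: fixed-budget best-arm identification.

    Each hypothetical strategy i 'succeeds' with probability means[i].
    Returns (index of the recommended strategy, trials spent on each one).
    """
    k = len(means)
    if budget <= k:
        raise ValueError("budget must exceed the number of strategies")
    rng = random.Random(seed)
    # Normalising constant from Successive Rejects: 1/2 + sum_{i=2}^{K} 1/i.
    log_bar = 0.5 + sum(1.0 / i for i in range(2, k + 1))
    active = list(range(k))
    counts = [0] * k
    sums = [0.0] * k
    n_prev = 0
    for phase in range(1, k):
        # Cumulative number of trials each surviving strategy has after this phase.
        n_phase = math.ceil((budget - k) / (log_bar * (k + 1 - phase)))
        for arm in active:
            for _ in range(n_phase - n_prev):
                sums[arm] += 1.0 if rng.random() < means[arm] else 0.0
                counts[arm] += 1
        n_prev = n_phase
        # Discard the surviving strategy with the lowest empirical mean.
        worst = min(active, key=lambda a: sums[a] / counts[a])
        active.remove(worst)
    return active[0], counts


if __name__ == "__main__":
    # Ten hypothetical strategies with success rates 0.0, 0.1, ..., 0.9.
    strategies = [i / 10 for i in range(10)]
    best, trials = successive_rejects(strategies, budget=5000, seed=0)
    print("recommended strategy:", best, "trials used:", sum(trials))
```

The phase lengths grow as strategies are eliminated, so most of the budget goes to separating the closest competitors, and the phase schedule never spends more than the stated budget.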

Privacy is another challenge, particularly in medicine. During learning, algorithms may reveal information that could help identify patients. Imposing a confidentiality requirement therefore adds a further constraint to the problem and affects the algorithms' performance. This new research makes it possible to predict the best performance theoretically achievable under this constraint, and thereby to determine the best algorithm.

A final difficulty was also investigated: whereas data derived from simulations is “clean”, data from real cases may be “corrupted”. In other words, outliers are not unusual, for instance when a patient's diabetes disrupts the outcome of a treatment, or when the arrival of an invasive species affects the quality of harvests. Reliant's results have advanced the understanding of these cases, with a view to adapting the algorithms and making them more robust.

“The work carried out within Reliant has taught us a lot about the importance of these different factors in multi-armed bandit problems,” sums up Junya Honda. “Thanks to this partnership, we have made significant strides in research on applying these algorithms.” The associate team's output over these three years has indeed been prolific, as shown by the many publications produced by Reliant's six permanent researchers and their students.

All these results open up new perspectives. “As we confront multi-armed bandits with reality, new questions arise,” concludes Odalric-Ambrym Maillard. “We have currently identified around twenty challenges and are working on resolving them. And there will definitely be more to come!”

The "associate team" programme is part of Inria's international policy. Its objectives are to support bilateral scientific collaborations while promoting and strengthening Inria's strategic partnerships abroad. Funded for a three-year period, an associate team carries out a joint research project involving an Inria project team and a research team abroad.

The two partner teams jointly define a scientific objective, a research plan and a schedule for bilateral student exchanges. “It's important for PhD students to discover different research environments,” asserts Odalric-Ambrym Maillard, an Inria researcher. “Generally speaking, meeting our counterparts creates synergies and facilitates the emergence of new projects.”

The Reliant associate team has proved a success. “Our two entities were already bringing together world-class experts in reinforcement learning and multi-armed bandits. Pooling our complementary expertise has significantly accelerated our respective research,” explains the French researcher. Junya Honda, Associate Professor at Kyoto University, adds: “Together, we have found a way to bridge the gap between the theoretical and actual performance of multi-armed bandit algorithms. It's a real innovation!”

The joint activities and scientific discussions within Reliant have led to tools that each team can use in its own country. And the fields of application are very varied: improving harvests by adapting crops to their environment, running clinical trials to set the dose of a drug while taking its side effects into account, and even identifying cancer cells under the microscope with as few measurements as possible.